OctNet: Learning Deep 3D Representations at High Resolutions

2017

Conference Paper


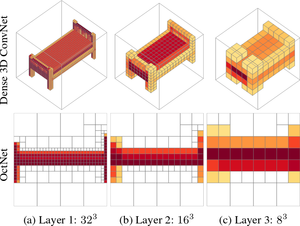

We present OctNet, a representation for deep learning with sparse 3D data. In contrast to existing models, our representation enables 3D convolutional networks that are both deep and high-resolution. Towards this goal, we exploit the sparsity in the input data to hierarchically partition the space using a set of unbalanced octrees where each leaf node stores a pooled feature representation. This allows us to focus memory allocation and computation on the relevant dense regions and enables deeper networks without compromising resolution. We demonstrate the utility of our OctNet representation by analyzing the impact of resolution on several 3D tasks, including 3D object classification, orientation estimation and point cloud labeling.
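The space-partitioning idea in the abstract can be illustrated with a small sketch. This is not the authors' implementation: the names `build_octree` and `build_octree_set`, the tree side length of 8, the "split until homogeneous" rule and mean pooling at the leaves are all assumptions made for illustration. The paper's actual data structure and its convolution and pooling operators are not reproduced here. The sketch covers a dense occupancy grid with a set of shallow octrees, splits a cell only where its contents are not uniform, and stores one pooled value per leaf.

```python
import numpy as np


def build_octree(grid, origin, size, leaves):
    """Recursively split a cubic cell until it is homogeneous or a single voxel.

    Each leaf is appended as (x, y, z, size, pooled_value); pooled_value is the
    mean of the voxels the leaf covers (mean pooling is an assumed choice).
    """
    x, y, z = origin
    block = grid[x:x + size, y:y + size, z:z + size]
    # Uniform cells (e.g. empty space) collapse into a single leaf.
    if size == 1 or np.all(block == block.flat[0]):
        leaves.append((x, y, z, size, float(block.mean())))
        return
    half = size // 2
    # Otherwise split into 8 octants and recurse into each one.
    for dx in (0, half):
        for dy in (0, half):
            for dz in (0, half):
                build_octree(grid, (x + dx, y + dy, z + dz), half, leaves)


def build_octree_set(grid, tree_size=8):
    """Cover a dense N^3 grid with a set of shallow octrees of side tree_size.

    Assumes a cubic grid, N a multiple of tree_size, and tree_size a power of two.
    """
    n = grid.shape[0]
    assert grid.shape == (n, n, n) and n % tree_size == 0
    leaves = []
    for x in range(0, n, tree_size):
        for y in range(0, n, tree_size):
            for z in range(0, n, tree_size):
                build_octree(grid, (x, y, z), tree_size, leaves)
    return leaves


if __name__ == "__main__":
    grid = np.zeros((16, 16, 16), dtype=np.float32)
    grid[5, 5, 5] = 1.0  # a single occupied voxel in otherwise empty space
    leaves = build_octree_set(grid, tree_size=8)
    print(len(leaves), "leaves vs", grid.size, "dense voxels")  # 29 leaves vs 4096 dense voxels
```

In this toy example, the seven empty 8^3 trees each reduce to one leaf, and only the tree containing the occupied voxel is refined down to single voxels. This gives 29 leaves instead of 4096 dense cells, which shows in miniature why memory and computation can be concentrated on the occupied regions of sparse input.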

| Author(s): | Gernot Riegler and Osman Ulusoy and Andreas Geiger |

| Book Title: | Proceedings IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017 |

| Pages: | 6620-6629 |

| Year: | 2017 |

| Month: | July |

| Day: | 21-26 |

| Publisher: | IEEE |

| Department(s): | Autonomous Vision, Perceiving Systems |

| Research Project(s): | Learning Deep Representations of 3D; Efficient volumetric inference with OctNet; Efficient and Scalable Inference |

| Bibtex Type: | Conference Paper (inproceedings) |

| Paper Type: | Conference |

| Event Name: | IEEE Conference on Computer Vision and Pattern Recognition (CVPR) |

| Event Place: | Honolulu, HI, USA |

| Address: | Piscataway, NJ, USA |

| ISBN: | 978-1-5386-0457-1 |

| ISSN: | 1063-6919 |

| Links: | pdf, suppmat, Project Page, Video |


BibTeX:

```bibtex
@inproceedings{Riegler2017CVPR,
  title = {OctNet: Learning Deep 3D Representations at High Resolutions},
  author = {Riegler, Gernot and Ulusoy, Osman and Geiger, Andreas},
  booktitle = {Proceedings IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017},
  pages = {6620-6629},
  publisher = {IEEE},
  address = {Piscataway, NJ, USA},
  month = jul,
  year = {2017},
  doi = {},
  month_numeric = {7}
}
```